CMAKE UBUNTU 20.04 INSTALL

OpenVINO™ Development Tools (pip install openvino-dev) are being deprecated and will be removed from the installation options and distribution channels with the 2025.0 release.

NEW: Support for Intel® Core™ Ultra (codename Meteor Lake). This new generation of Intel CPUs is tailored to excel in AI workloads, with a built-in inference accelerator.

Integration with MediaPipe: developers now have direct access to this framework for building multipurpose AI pipelines. It integrates easily with OpenVINO Runtime and OpenVINO Model Server to speed up AI model execution. You also benefit from seamless model management and version control, as well as custom logic integration through additional calculators and graphs for tailored AI solutions. Lastly, you can scale faster by delegating deployment to remote hosts via gRPC/REST interfaces for distributed processing.
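Delegating inference to a remote OpenVINO Model Server over REST comes down to an HTTP call. Its REST API follows the KServe v2 inference protocol (`POST /v2/models/{name}/infer`). Below is a minimal sketch that uses only the Python standard library. The host `localhost:8000`, the model name `resnet`, and the input name `input` are hypothetical placeholders for your own deployment. The request is built but not sent.

```python
import json
import urllib.request


def build_infer_request(host, model_name, input_name, shape, data):
    """Build (but do not send) a KServe v2 REST inference request.

    host, model_name and input_name are placeholders for your deployment.
    """
    # The element count implied by the shape must match the flat data list.
    expected = 1
    for dim in shape:
        expected *= dim
    if expected != len(data):
        raise ValueError(f"shape {shape} needs {expected} values, got {len(data)}")

    # KServe v2 body: one entry per input tensor, with data as a flat list.
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": "FP32",
                "data": list(data),
            }
        ]
    }
    url = f"http://{host}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_infer_request("localhost:8000", "resnet", "input", [1, 4], [0.1, 0.2, 0.3, 0.4])
print(req.full_url)      # http://localhost:8000/v2/models/resnet/infer
print(req.get_method())  # POST
# To actually send it to a running server:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["outputs"])
```

Keeping request construction separate from sending makes the payload easy to inspect. It also lets you swap in another HTTP client without touching the protocol details.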

More portability and performance to run AI at the edge, in the cloud, or locally.

Improved LLMs on GPU: model coverage for dynamic shapes support has been expanded, further improving the performance of generative AI workloads on both integrated and discrete GPUs. Memory reuse and weight memory consumption for dynamic shapes have also been improved.

Neural Network Compression Framework (NNCF) now includes an 8-bit weight compression method, making it easier to compress and optimize LLMs. The SmoothQuant method has also been added for more accurate and efficient post-training quantization of Transformer-based models.
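To make 8-bit weight compression concrete, here is a generic sketch of asymmetric, per-output-channel uint8 quantization in plain Python. The scheme is our own assumption: one scale and one zero point per weight row, with the range widened to include zero. It is not NNCF's implementation. NNCF exposes weight compression through `nncf.compress_weights()`, whose exact scheme and defaults may differ by version.

```python
def quantize_row_uint8(row):
    """Asymmetric 8-bit quantization of one output channel (one weight row)."""
    # Widen the range to include 0 so the zero point lands in [0, 255].
    lo, hi = min(min(row), 0.0), max(max(row), 0.0)
    # An all-zero row would give a zero scale; use 1.0 instead.
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    q = [min(255, max(0, round(w / scale) + zero_point)) for w in row]
    return q, scale, zero_point


def dequantize_row(q, scale, zero_point):
    """Recover approximate float weights: w ~= (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]


weights = [
    [0.50, -1.20, 0.03, 2.40],
    [-0.01, 0.02, 0.00, -0.03],
]
for row in weights:
    q, scale, zp = quantize_row_uint8(row)
    restored = dequantize_row(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(row, restored))
    print(q, f"scale={scale:.5f}", f"zp={zp}", f"max_err={max_err:.5f}")
```

Only the uint8 codes need to be stored, plus one scale and one zero point per row. That is roughly a 4x reduction compared with FP32 weights. The reconstruction error for each weight is at most half a quantization step (`scale / 2`).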

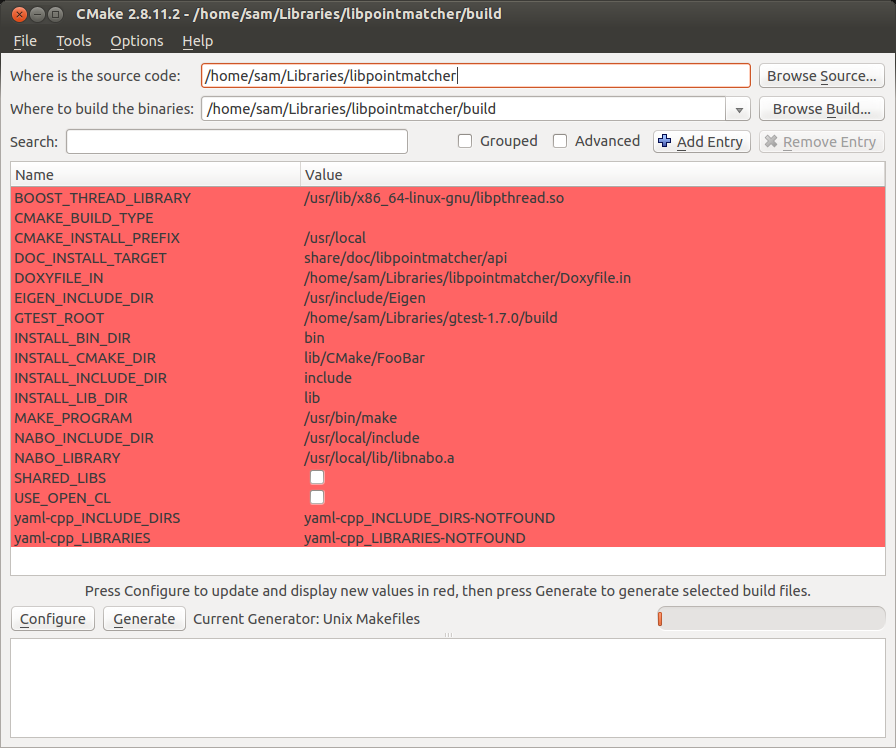
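The SmoothQuant method mentioned earlier makes activations easier to quantize by moving their outliers into the weights. For a linear layer Y = X·W, each input channel j gets a factor s_j = max|X_j|^α / max|W_j|^(1−α). The activation column is then divided by s_j and the matching weight row is multiplied by s_j, so the product stays mathematically unchanged. Below is a minimal plain-Python sketch of that idea with α = 0.5, the value the SmoothQuant paper suggests as a default. The toy matrices are made up for illustration. In NNCF the technique is applied as part of post-training quantization rather than called like this.

```python
def smooth_scales(x, w, alpha=0.5):
    """Per-input-channel SmoothQuant factors s_j = max|X_j|^a / max|W_j|^(1-a).

    x: activations as a list of rows (tokens x in_channels)
    w: weights as a list of rows (in_channels x out_channels)
    """
    scales = []
    for j in range(len(w)):
        act_max = max(abs(row[j]) for row in x)
        w_max = max(abs(v) for v in w[j])
        # Fall back to 1.0 for an all-zero channel to avoid dividing by zero.
        if act_max == 0 or w_max == 0:
            scales.append(1.0)
        else:
            scales.append(act_max ** alpha / w_max ** (1 - alpha))
    return scales


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a_ik * b[k][j] for k, a_ik in enumerate(row)) for j in range(len(b[0]))]
            for row in a]


def apply_smoothing(x, w, scales):
    """Divide activation column j by s_j and multiply weight row j by s_j."""
    x_s = [[v / scales[j] for j, v in enumerate(row)] for row in x]
    w_s = [[v * scales[j] for v in w_row] for j, w_row in enumerate(w)]
    return x_s, w_s


# Input channel 1 carries large activation outliers (values around 40).
x = [[0.5, 40.0, -0.2], [-0.3, 35.0, 0.1]]
w = [[0.2, -0.1], [0.05, 0.02], [-0.3, 0.4]]

s = smooth_scales(x, w)
x_s, w_s = apply_smoothing(x, w, s)
print("scales:", [round(v, 3) for v in s])
print("max |x| per channel before:", [max(abs(r[j]) for r in x) for j in range(3)])
print("max |x| per channel after: ", [round(max(abs(r[j]) for r in x_s), 3) for j in range(3)])
```

After smoothing, the outlier channel shrinks sharply while `matmul(x_s, w_s)` still equals `matmul(x, w)`. That equivalence is what lets both tensors be quantized to 8 bits with less accuracy loss.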

CMAKE UBUNTU 20.04 CODE

Optimum Intel: Hugging Face and Intel continue to enhance top generative AI models by optimizing execution, making your models run faster and more efficiently on both CPU and GPU. OpenVINO serves as the runtime for inference execution. New PyTorch auto-import and conversion capabilities have been enabled, along with support for weight compression to achieve further performance gains.

Broader LLM model support and more model compression techniques

Enhanced performance and accessibility for Generative AI: runtime performance and memory usage have been significantly optimized, especially for Large Language Models (LLMs). Models used for chatbots, instruction following, code generation, and many more, including prominent models like BLOOM, Dolly, Llama 2, GPT-J, GPTNeoX, ChatGLM, and Open-Llama, have been enabled.

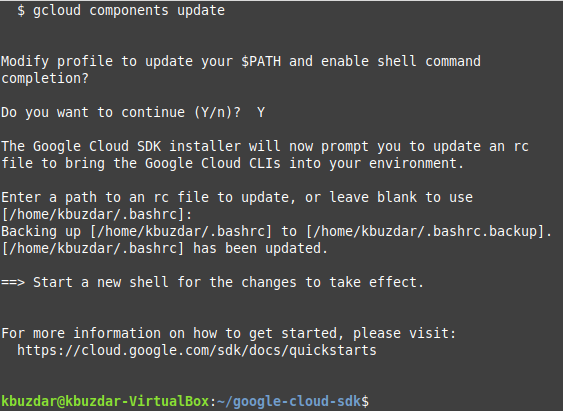

CMAKE UBUNTU 20.04 WINDOWS

New and Changed in 2023.1

Summary of major features and improvements

More Generative AI options with Hugging Face and improved PyTorch model support.

NEW: Your PyTorch solutions are now even further enhanced with OpenVINO. You've got more options, and you no longer need to convert to ONNX for deployment. Developers can now use their API of choice, PyTorch or OpenVINO, for added performance benefits. Additionally, users can automatically import and convert PyTorch models for quicker deployment. You can continue to make the most of OpenVINO tools for advanced model compression and deployment advantages, ensuring flexibility and a range of options.

torch.compile (preview): OpenVINO is now available as a backend through PyTorch torch.compile, empowering developers to use the OpenVINO toolkit through PyTorch APIs. This feature has also been integrated into the Automatic1111 Stable Diffusion Web UI, helping developers achieve accelerated performance for Stable Diffusion 1.5 and 2.1 on Intel CPUs and GPUs on both native Linux and Windows platforms.
